4 June 2020

At the RSA Conference in 2019, Etienne Greff and Wicus Ross – two of my esteemed colleagues from the Technology and Innovation team within Orange Cyberdefense – presented some of their research into how AI could be used maliciously. Part one of this blog series explored what AI is and what its limitations are, and part two focused on defensive applications of AI and the need to keep humans in the loop. Now it is time to focus on more nefarious uses.

Etienne and Wicus experimented with topic modeling and with the machine learning features Microsoft was introducing into Windows. They explored how, from an attacker's perspective, AI happens to be really useful (more useful than from a defender's point of view, they surmised). This makes sense: attackers have to keep moving and evolving to stay ahead of our defensive measures. Their victims, meanwhile, continue to be creatures of habit, so when attackers know what they are looking for, why wouldn't they automate away much of the manual work? As with Microsoft features of the near and distant past (from email attachments to macros), these new capabilities could be put to work for the attacker's benefit. Only this time, on a whole new scale.

At the time of the research, this feature set was not yet generally available, so we set out to do something that would be possible on Windows using only what was already available.

With Etienne's help and knowledge of topic modeling, Wicus wrote Python code (using scikit-learn modules, among others) that ran on Windows. It did not rely on Windows libraries, yet it was portable and functional on all versions of Windows available at the time.

For more information on Windows ML, see: docs.microsoft.com/en-us/windows/ai/windows-ml-container/getting-started

The premise of the research was quite simple:

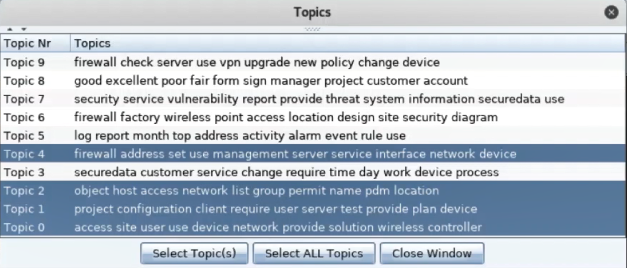

Fig.1 – Pick your topics! Our lead researcher used Cobalt Strike to run topic modeling on the “victim” machine

The experiment was successful. It demonstrated that the interesting information could be targeted quickly and efficiently, and then exfiltrated selectively. That is much less suspicious than exfiltrating all of the data and examining it later to see whether you had managed to get anything interesting.

We will come back to Wicus and his adventures with topic modeling shortly. But first, a quick diversion. When I really got into the details of AI, one question I asked myself was: had anyone demonstrated breaking AI models used in security? I had seen examples of next-generation AV being evaded, but could someone break the model entirely?

In their paper “Practical Black-Box Attacks against Machine Learning”[i], Papernot et al. explored not only attacking machine learning models, but doing so without any knowledge of the model in use: a “black box” rather than a “white box” scenario. How? Quite simply, by leveraging APIs. APIs are essential in the modern cybersecurity technology landscape. How many times has a vendor presented their product to you with the phrase “and of course, we have an open API”? All in the spirit of interoperability and flexibility.

Unfortunately, this flexibility can also be exploited. In the old days, it might have been hard for hackers to get their hands on our defenders' technology. Now, how many security “devices” can you simply buy from the AWS or Azure marketplaces? What is to stop a cybercriminal from spinning up the newest AI-enabled defensive platform and throwing some data at it? In short: with some test data and enough time, you control the input and, via the API, you can observe the output. That means you can learn over time how the AI makes decisions and, eventually, get it to make the decision you want.
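To make the black-box idea concrete, here is a minimal sketch in the spirit of Papernot et al., not their actual method. Assumptions: the “API” is simulated by a local scikit-learn random forest hidden behind a hypothetical `query_api()` function, the data is synthetic, and the attacker's substitute is a logistic regression. The paper goes further, using Jacobian-based dataset augmentation to choose new queries and then crafting adversarial examples against the substitute that transfer to the target; this sketch only shows that labels alone are enough to build a model that mimics the target's decisions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression

# Synthetic data split three ways (all of this is a hypothetical stand-in):
#   [0:1000]    data the vendor used to train its model (the attacker never sees it)
#   [1000:1500] unlabeled "test data" the attacker happens to hold
#   [1500:2000] fresh inputs used only to measure how well the copy mimics the target
X, y = make_classification(n_samples=2000, n_features=10, n_informative=4,
                           n_redundant=0, n_clusters_per_class=1, random_state=0)
X_vendor, y_vendor = X[:1000], y[:1000]
X_attacker = X[1000:1500]
X_eval = X[1500:]

# The black-box target: in the scenario above it would sit behind a product API.
_target = RandomForestClassifier(n_estimators=50, random_state=0).fit(X_vendor, y_vendor)


def query_api(inputs):
    """Hypothetical API call: returns only predicted labels, never model internals."""
    return _target.predict(inputs)


def train_substitute(inputs):
    """Label attacker-held inputs via the API, then fit a substitute model on them."""
    labels = query_api(inputs)  # the only information obtained from the target
    return LogisticRegression(max_iter=1000).fit(inputs, labels)


def agreement(substitute, inputs):
    """Fraction of inputs on which the substitute makes the same decision as the target."""
    return float(np.mean(substitute.predict(inputs) == query_api(inputs)))


if __name__ == "__main__":
    sub = train_substitute(X_attacker)
    print(f"substitute agrees with target on {agreement(sub, X_eval):.0%} of unseen inputs")
```

The substitute never sees the target's training data or parameters; everything it learns comes from input/output pairs, which is exactly what an open API exposes.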

For now, mimicking or recreating the machine learning models used by cybersecurity tools remains largely theoretical in practice. Why? Quite simply, attackers don't need to be that sophisticated: there is still a lot of old technology out there.

What is even scarier is the way digital transformation is opening up many more attack surfaces, and reinvigorating some old ones. Take domain squatting. Remember our friend Wicus from the Orange Cyberdefense research team, and his experiments with topic modeling? The first example still required access to the end user's machine and could therefore be detected if the defending organization had the right tools in place (endpoint detection and response, for example). But what about an approach that didn't need access to the end user's machine at all? What if we could get lots of data without the hassle of infiltrating a network or compromising users' machines?

That was the next trick. We took six prominent real estate firms, registered a lot of domains that were slight variations on their legitimate domains (typosquatting, for those who aren't familiar) and set up a mail server to receive email sent to our newly owned domains. Then all we had to do was wait.
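To show what “slight variations” means in practice, here is a minimal sketch that enumerates three common typing errors for a domain name. The helper `typo_variants()` is hypothetical and deliberately limited; open-source tools such as dnstwist cover many more permutation types (homoglyphs, alternative TLDs and so on). Defenders can use the same enumeration to decide which lookalike names to watch for, or to register themselves.

```python
def typo_variants(domain):
    """Generate simple typo variants of a domain's first label (hypothetical helper).

    Covers three common typing errors: a dropped character, two adjacent
    characters swapped, and a doubled character. Real tools cover far more.
    """
    name, _, suffix = domain.partition(".")  # "example.co.uk" -> "example", "co.uk"
    variants = set()
    for i in range(len(name)):
        variants.add(name[:i] + name[i + 1:])        # omission:    "exmple"
        variants.add(name[:i] + name[i] + name[i:])  # duplication: "eexample"
        if i < len(name) - 1:
            # transposition of characters i and i+1: "xeample"
            variants.add(name[:i] + name[i + 1] + name[i] + name[i + 2:])
    variants.discard(name)  # swapping identical neighbours ("ee") reproduces the original
    variants.discard("")    # omitting the only character of a one-letter name
    return sorted(f"{v}.{suffix}" for v in variants)
```

For example, `typo_variants("abc.com")` returns the eight candidates `aabc.com`, `ab.com`, `abbc.com`, `abcc.com`, `ac.com`, `acb.com`, `bac.com` and `bc.com`.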

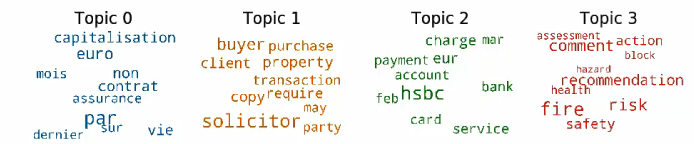

Many emails rolled in as people mistyped email addresses, and from there we again turned to topic modeling, because once again we wanted to look only at the emails of interest. And believe me, we collected a LOT of emails.

Fig.2 – Topic modeling a truckload of emails
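Once the mail arrives, topic modeling can be used to triage it. Here is a minimal sketch, again assuming scikit-learn's LDA: fit topics over the collected messages, find the topic in which a chosen keyword carries the most weight, and keep only the emails where that topic makes up more than half of the topic mix. The function `emails_about()`, the keyword approach and the 0.5 threshold are illustrative assumptions, not the team's actual pipeline.

```python
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import CountVectorizer


def emails_about(emails, keyword, n_topics=2, threshold=0.5, random_state=0):
    """Return emails whose weight on the topic most associated with `keyword` exceeds threshold.

    Hypothetical helper for illustration. `keyword` must appear in the fitted
    vocabulary (lowercase, not an English stop word), otherwise a KeyError is raised.
    """
    vectorizer = CountVectorizer(stop_words="english")
    counts = vectorizer.fit_transform(emails)

    lda = LatentDirichletAllocation(n_components=n_topics, random_state=random_state)
    # Rows: emails; columns: each email's topic proportions (each row sums to 1).
    doc_topics = lda.fit_transform(counts)

    # Pick the topic in which the keyword carries the most weight.
    keyword_index = vectorizer.vocabulary_[keyword]
    topic = lda.components_[:, keyword_index].argmax()

    return [email for email, mix in zip(emails, doc_topics) if mix[topic] > threshold]
```

On a real mailbox you would inspect the topics first (as in the topic-word listing earlier in this post) and then choose keywords; the threshold trades recall against the volume of mail you keep.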

Again, the process proved very efficient, and it showed that big data mining is useful not just to commercial organizations but to cybercriminals too.

Many companies conduct domain monitoring, but many, many more do not and have probably not even thought about it. When lookalike domains are such an easy route to your clients and your data, and as our digital footprints grow, I would suggest that ignorance is not bliss on this topic.
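On the defensive side, a simple form of domain monitoring is to compare newly observed domain names against your own and flag near misses. Here is a minimal sketch, assuming you already have a list of observed domains (for example from a newly-registered-domain feed or Certificate Transparency logs; the source is up to you). It uses Levenshtein edit distance, and `flag_lookalikes()` is a hypothetical helper.

```python
def levenshtein(a, b):
    """Classic dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (ca != cb)))  # substitution (free if equal)
        prev = curr
    return prev[-1]


def flag_lookalikes(protected, observed, max_distance=2):
    """Return (observed, protected, distance) for observed domains close to, but not equal to, a protected one."""
    hits = []
    for seen in observed:
        for own in protected:
            # Compares the full names, TLD included; TLD swaps would need a separate check.
            d = levenshtein(seen.lower(), own.lower())
            if 0 < d <= max_distance:
                hits.append((seen, own, d))
    return hits
```

For example, `flag_lookalikes(["example.com"], ["exmaple.com", "example.com", "unrelated.org"])` returns `[("exmaple.com", "example.com", 2)]`. A threshold of 2 is used because plain Levenshtein distance counts a swap of two adjacent characters as two edits; the Damerau–Levenshtein variant would count it as one.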

To sum up what we have explored in this blog series, I would like to end with this thought: the cyberwar continues, and the soldiers on both sides are still the same. They have not (yet) been replaced by robots; they have just got bigger and better weapons. And you are going to need someone who knows how to use them, because the enemy already does. If you haven't already, check out part 1 and part 2 of this blog series.

[i] N. Papernot et al., “Practical Black-Box Attacks against Machine Learning”.