Incident Response: Acceleration through Automation

Author: Samantha Caven, CSIRT Analyst

Digital Forensics and Incident Response require an immediate counter-reaction to a wide range of cyber-attacks. Collecting and analysing artifacts quickly and efficiently is key to understanding a threat actor’s point of entry and plan of attack, and to identifying mitigations for future protection. However, this can be a complex and lengthy procedure. Here we drill down into the scripting and automation of some of these elaborate processes to improve our response times and the efficiency of our analysts.

A common problem

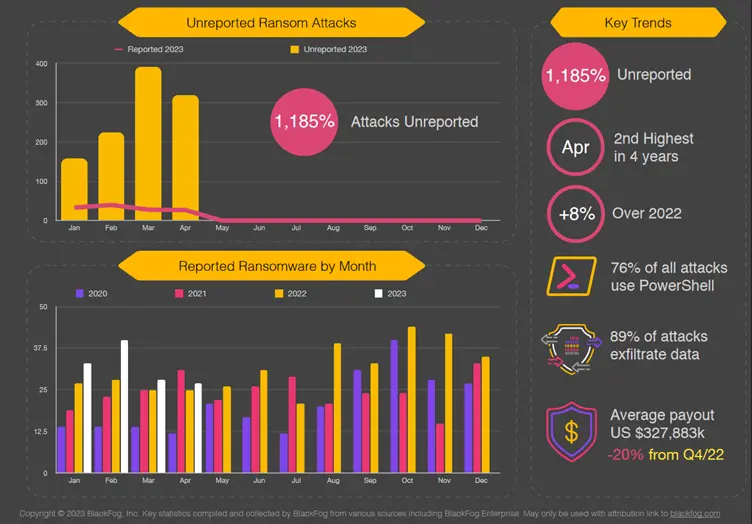

Windows PowerShell. The powerful, coveted, and cross-platform tool that can automate almost anything. Its scripting capabilities make it great for admins who want to automate repetitive tasks. Although it was originally built to do good, threat actors know the power of PowerShell too and have been exploiting it to do their nasty bidding. This is because PowerShell is an allow-listed tool, which makes it perfect for escalating privileges and executing malicious scripts. Mostly used in post-exploitation, PowerShell can be used maliciously for domain discovery, lateral movement, and privilege escalation. Furthermore, it can deliver fileless malware, which uses a machine’s native tooling and runs in memory. In May 2023, The Stack published a report that claimed PowerShell was used in 76% of ransomware attacks.

Cobalt Strike is famously known for its $makeitso variables, and the ransomware group Vice Society use a custom-built script called w1.ps1 to exfiltrate data. These are just two common examples of the malicious use of PowerShell. What makes all this worse is that PowerShell has limited, if any, traceability, which is what makes it so desirable to use.

However, there are some silver linings we cannot ignore.

PowerShell for good use

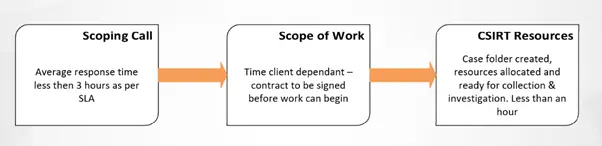

When we are alerted to a potential case, we ensure a scoping call takes place to confirm the severity of the situation and which of our services is best to deploy. Regardless of which services are chosen, we need to get things into place quickly and efficiently so that we can accept and/or extract data for a customer and keep it in a safe and secure place. Previously, this was a manual task that took up some time.

Now, we utilise the automation of PowerShell which seamlessly integrates with applications such as Teams and Active Directory. This means that with a single command, we can generate case folders, allocate resources as we need them and create a platform for our customers to either send us the data, or for us to extract it. That single command is called: Casebot.

Casebot is a PowerShell script that can be integrated into Teams by using the Power Automate feature. This allows us to quickly create individual case folders for customers using a variety of case names, and to add resources such as our CSIRT Analysts on a case-by-case basis, meaning other analysts can’t access a case they are not investigating. This allows us to easily manage multiple cases on the go without any overlap of these resources. Furthermore, Casebot can generate SFTP passwords that can be sent directly to the customer, all from one single place.

Logging the logs

We can’t investigate or analyse what isn’t there. You hear this all the time, LOG LOG LOG! But at what point is it too much to handle? Some would say we have already reached that threshold. But logging PowerShell is vitally important when it comes down to incident response. If the logs aren't there, we simply can't put all the pieces back together to figure out what happened. There are three main ways to log PowerShell activity:

- Module logging - This log type tracks PowerShell modules that have been triggered (think Get-ExecutionPolicy) and is logged under event ID 4103. These events do provide some information but don’t always reliably capture executed commands.

- Transcript logging - This records an entire script that has been executed during a PowerShell session and is stored as a text file in the Documents folder of that user. The text file mostly contains metadata and timestamps of the scripts that were executed, which can be useful in analysis; however, most of the data is also captured in module and script block logs.

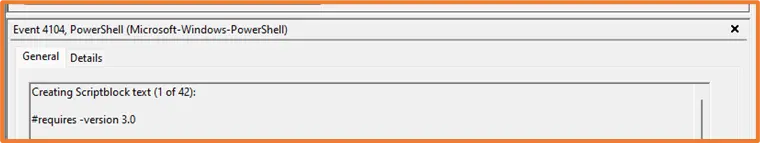

- Script-block logging - This is the event we are most interested in. Event ID 4104 captures the blocks of scripts and cmdlets that were executed, recording the entire script that was run and who triggered it. This does lead to some trouble when it comes to putting the scripts back together, as the size of each log entry is restricted and a large script is therefore cut up into pieces to be logged.

If you had to choose one PowerShell log out of the three, I'd recommend script block logging. Why? Because that is the one that can capture that nasty w1.ps1 script from Vice Society mentioned earlier, and help piece together vital IOCs that can be used for YARA, Sigma and XDR rules in the future! In early 2023, Unit 42 found the Vice Society script and were able to recover it from the Microsoft Windows PowerShell Operational log, because Script Block Logging was enabled. However, piecing it back together would not have been an easy task, until now!

An automated solution to an automated problem

Some threat actors have also taken note of how automation can help with complex processes and have automated their ransomware scripts, which makes running their tools so much faster. That is a scary thought. This is why logging PowerShell is becoming more important: you can’t catch what you can’t see.

The main issues when working with event logs are retention and size. If a lot of PowerShell scripts are executed in a short space of time, the event logs may only have retained partial script blocks. Although this isn't ideal, a lot can still be determined and understood even with that small piece of information. When a large script is run in Windows, it's stored with Event ID 4104, and the beginning of each log entry tells you how many pieces the scriptblock was split into:

In this example, the scriptblock takes up 42 separate event log entries. The script being run is native to Windows, a completely normal operation. Can you imagine the size of the script blocks when malicious actors are wreaking havoc on a network? An analyst would then need to manually piece those 42 bits of text together in the hope of understanding what the script was doing.
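To show what that header looks like in practice, here is a minimal Python sketch (not the PowerShell tooling described in this article) that reads the fragment number and the total fragment count from the start of a 4104 message. The function name `fragment_position` and the sample message are made up for illustration; the only thing taken from Windows is the `Creating Scriptblock text (N of M):` header format.

```python
import re

# Header that Windows writes at the start of each 4104 message,
# e.g. "Creating Scriptblock text (1 of 42):"
HEADER = re.compile(r"Creating Scriptblock text \((\d+) of (\d+)\):")


def fragment_position(message: str) -> tuple[int, int]:
    """Return (fragment_number, total_fragments) from a 4104 message."""
    match = HEADER.match(message.lstrip())
    if match is None:
        raise ValueError("message does not start with a scriptblock header")
    return int(match.group(1)), int(match.group(2))


# Hypothetical sample message, trimmed for illustration.
sample = "Creating Scriptblock text (1 of 42):\nfunction Get-Example { }"
number, total = fragment_position(sample)
print(f"fragment {number} of {total}")
```

Knowing both numbers lets an analyst spot quickly whether every fragment of a script survived log rotation or whether only part of it was retained.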

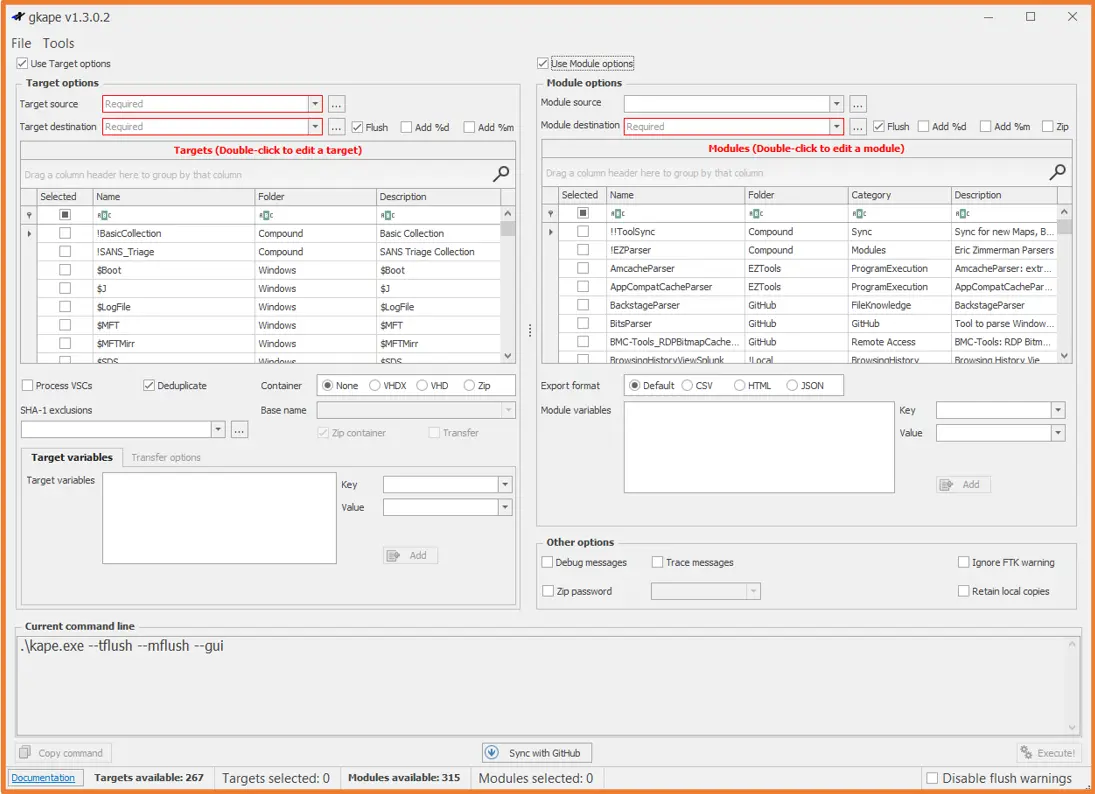

Here at Orange Cyberdefense CSIRT, we use KAPE to collect our artifacts prior to our analysis stage. KAPE is a configurable triage program which both collects and processes files. This processing can be done with other programs such as Eric Zimmerman's tools, but KAPE can also accept third-party processes as well as new targets and modules. The targets instruct KAPE which files to collect and where to find them, while the modules are the tools or parsers run against the collected targets. KAPE collects event logs by default, as they are very useful in DFIR, so the next step is to find a way to parse the Microsoft-Windows-PowerShell/Operational log and piece it back together into one readable file.

GitHub hosts all sorts of weird and wonderful tools, and that is where I stumbled onto a script created by Vikas Singh at Sophos in 2022, which can be found here. The original script is standalone, meaning it runs completely independently from other tooling and, more importantly, only collects the logs of the host it is being run on. Additionally, it is written in, you’ve guessed it, PowerShell! The task presented to me was to find a way to integrate it into KAPE and point it to the location of collected data from IR engagements to piece our event log puzzle back together.

You call it development; I call it experimenting because it makes me sound like a scientist. Here we have an independent script that pulls from the host, and I need to work my way through it line by line to understand how it does that, then figure out a way to get it to do the same thing against a different path. The first step is to figure out how to integrate it into KAPE so it can be given some parameters, such as the input path of our collected artifacts and an output path for storing the parsed data. KAPE can be run either from the command line or through a GUI. The GUI is useful for getting used to the tool itself, as it will tell you if something isn't quite right before running, and you can see all the targets and modules available to you, which can be helpful.

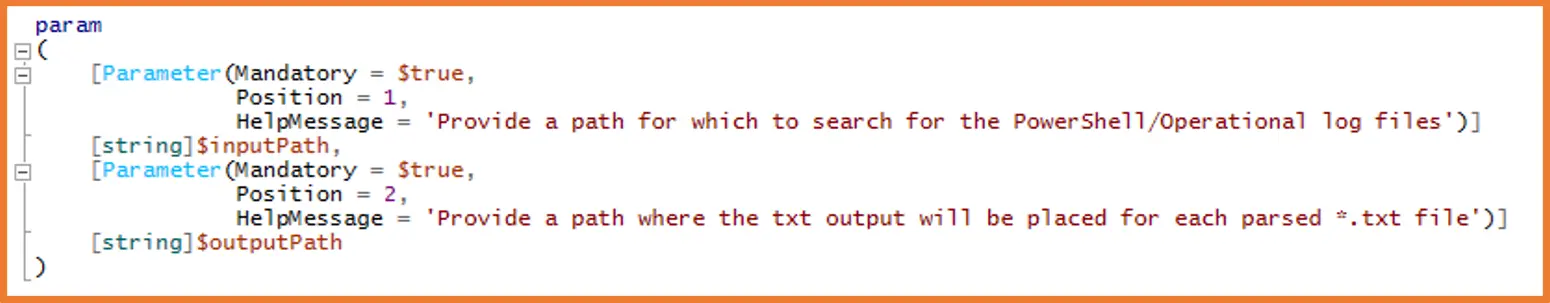

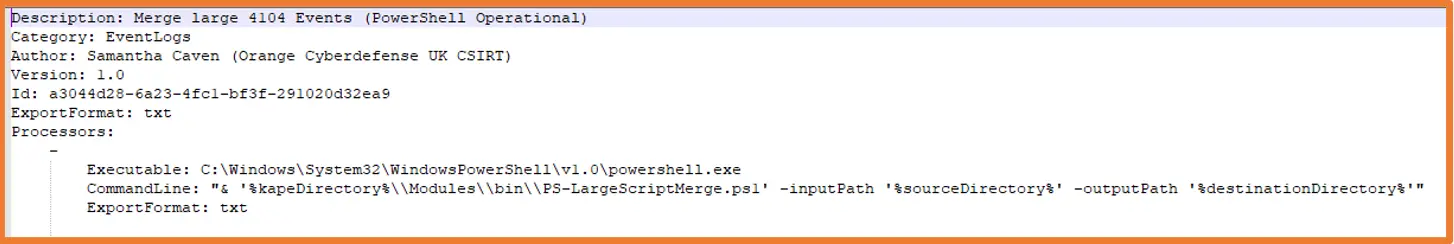

PowerShell has quite a few fundamental components, and one of them is the parameter. This is the component that makes it possible to give any script inputs at runtime, meaning we can change our paths beforehand without changing the main, underlying code. The param block is what we will use to pass in our input and output paths from KAPE, as these two values will change with each case and each machine we want to investigate. With this block added, we also need to create a module file within KAPE (this has the .MKAPE file extension), which tells KAPE which values to use with our module, where to put each one and where to find our script to run it. After some research and testing, the first step is complete. With its accompanying MKAPE file, it looks a little like this:

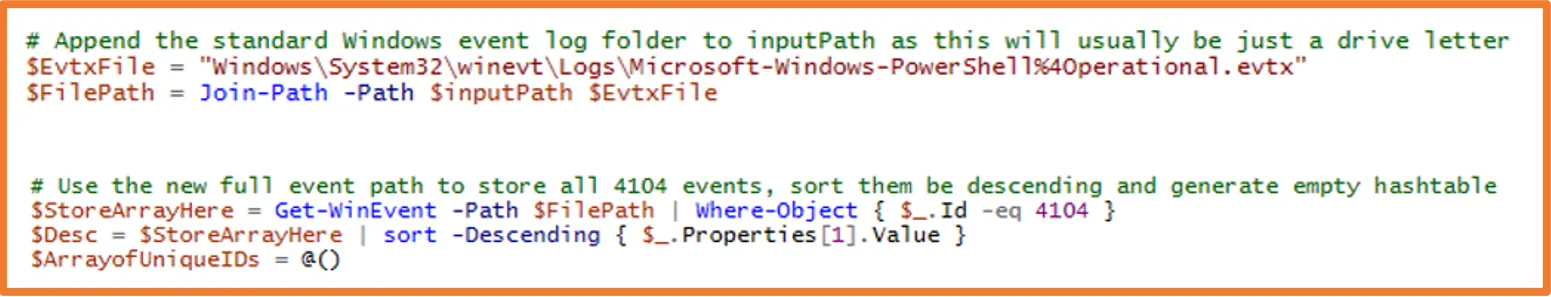

Now onto our next issue: currently this script only pulls the logs of the machine it runs on. What we need it to do is find the path to our collected machines and extract the PowerShell event logs. Windows PowerShell has different ways to locate files and folders. In the case of our script, we need to supply PowerShell with a static path that will be combined with our ever-changing input path. Once we join these paths together, we can tell PowerShell specifically which IDs we would like to extract, and it will iterate through the entire event log and pull out all events with ID 4104.
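The two ideas in this step, joining a static log path onto a changing input path and then keeping only 4104 events, can be sketched in a few lines of Python. This is an illustration and not the PowerShell script itself: the case folder `D:\Cases\HOST01\C`, the helper names, and the in-memory event dictionaries are all assumptions, and real events would come from an .evtx parser. The static part is the standard on-disk location of the PowerShell Operational log.

```python
from pathlib import PureWindowsPath

# Static part of the path: where Windows keeps the PowerShell Operational log.
POWERSHELL_LOG = PureWindowsPath(
    r"Windows\System32\winevt\Logs\Microsoft-Windows-PowerShell%4Operational.evtx"
)
SCRIPT_BLOCK_EVENT_ID = 4104


def collected_log_path(input_path: str) -> PureWindowsPath:
    """Join the per-case input path with the static log location."""
    return PureWindowsPath(input_path) / POWERSHELL_LOG


def script_block_events(events):
    """Keep only script block logging events (ID 4104)."""
    return [e for e in events if e["event_id"] == SCRIPT_BLOCK_EVENT_ID]


# Hypothetical collection folder for one machine in one case.
case_root = r"D:\Cases\HOST01\C"
print(collected_log_path(case_root))

# Hypothetical, already-parsed events standing in for real log records.
events = [
    {"event_id": 4103, "message": "module logging event"},
    {"event_id": 4104, "message": "Creating Scriptblock text (1 of 1):"},
]
print(len(script_block_events(events)))
```

`PureWindowsPath` is used so the join behaves the same on an analysis machine that is not running Windows.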

The next step is where the magic happens: the section of code that turns our messy, chopped-up event logs into one easy-to-read file for each unique script block found within the PowerShell Operational event log. To do this, we store our filtered events in an array. Then, using the properties of each event, we focus our sorting on the scriptblock ID and append each fragment to the script block it belongs to. Before this, the events are put into descending order, which allows the script to iterate quickly through the array and write everything to the output file in the correct order. The script does this for each unique scriptblock ID until there are none left. Once each ID is stitched back together, the script uses the scriptblock ID as the name of the file and writes it to the output path we supplied at the beginning.
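The stitching logic can be sketched in Python as follows. This is a simplified stand-in for the PowerShell script rather than a copy of it: the fragments are assumed to be already parsed into `(scriptblock ID, fragment number, text)` tuples, the function names and sample IDs are hypothetical, and fragments are sorted by fragment number in ascending order, which is my choice for the sketch.

```python
from collections import defaultdict
from pathlib import Path
import tempfile


def stitch_script_blocks(fragments):
    """Group fragments by scriptblock ID and join them in fragment order.

    Each fragment is a (script_block_id, fragment_number, text) tuple.
    """
    grouped = defaultdict(list)
    for block_id, number, text in fragments:
        grouped[block_id].append((number, text))
    return {
        block_id: "".join(
            text for _, text in sorted(parts, key=lambda part: part[0])
        )
        for block_id, parts in grouped.items()
    }


def write_script_blocks(scripts, output_dir):
    """Write one file per scriptblock ID, named after the ID."""
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for block_id, script in scripts.items():
        target = out / f"{block_id}.txt"
        target.write_text(script, encoding="utf-8")
        written.append(target)
    return written


# Hypothetical fragments, deliberately out of order.
fragments = [
    ("block-a", 2, "Write-Output 'two'\n"),
    ("block-b", 1, "Get-Date\n"),
    ("block-a", 1, "Write-Output 'one'\n"),
]
scripts = stitch_script_blocks(fragments)

with tempfile.TemporaryDirectory() as output_path:
    for path in write_script_blocks(scripts, output_path):
        print(path.name)
```

Grouping first and sorting within each group means the order in which events come out of the log does not matter, which helps when retention has left gaps between fragments.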

Winding Down

When investigating a cybersecurity incident, the golden hours of Incident Response are critical to understanding a threat actor’s point of entry, their level of access and the actions required for containment. Here at Orange Cyberdefense, we are using automation to reduce the burden of case management on our analytical resources and to shorten the time in which we can collect and analyse digital evidence.

PowerShell and automation techniques are being used by both threat actors and Incident Responders. For us, they improve our response times and aid in case management. Threat actors, meanwhile, can automate reconnaissance, exfiltration and ransomware, which accelerates their time from initial access to extortion.

If your environment does not use or need PowerShell, it is highly recommended to disable it. No use having something active if you’re not utilizing it, right? This provides an extra obstacle for threat actors to fumble with, which may cause some accidental noise and lead to earlier detection.

If you are using PowerShell, you need to start logging as much as possible, with script block logging at the top of your list. If your environment were to be attacked, you would then have some hope of finding out the how and the why, as well as identifying any further containment techniques.