9 February 2023

It has been impossible to miss this new advancement in the tech field at the start of the year. ChatGPT became a trending topic on social media in just a few weeks. From phishing to malware, many cybersecurity professionals are sharing their experiments and pointing to the malicious uses that cybercriminals could make of it. How does this technology work? Can ChatGPT increase the rate of cyberattacks? Can its use have consequences for companies? Here are some explanations.

Developed by OpenAI and publicly available since late November 2022, ChatGPT is a conversational tool built on artificial intelligence. Its name combines "chat" with GPT, short for "Generative Pre-trained Transformer". The technology communicates in writing by answering questions, simulating human conversation in a convincing way. Trained on a large corpus of text (newspaper articles, online conversations, novels, film scripts), ChatGPT can respond in a relevant and coherent way on many topics. The tool keeps improving as OpenAI refines it, notably with feedback from users. It can respond in multiple languages and adapts to different types of context. The software is fine-tuned from a model in OpenAI's GPT-3.5 series by combining two approaches: supervised learning and reinforcement learning.

ChatGPT has some limitations though:

Given this potential, Microsoft announced on January 23 that it would strengthen its partnership with OpenAI through an investment of several billion dollars. The American giant wants to integrate this artificial intelligence model into its own products, which would help it narrow the gap with competitors like Google. Nevertheless, like any technology, ChatGPT raises concerns about its possible misuse for malicious purposes.

Faced with such a technology, should we fear an annus horribilis for companies? We take stock.

The use of platforms to industrialize the creation of malware or phishing campaigns is not a new phenomenon. For several years, RaaS (Ransomware as a service) platforms and phishing kits have been used by cybercriminals.

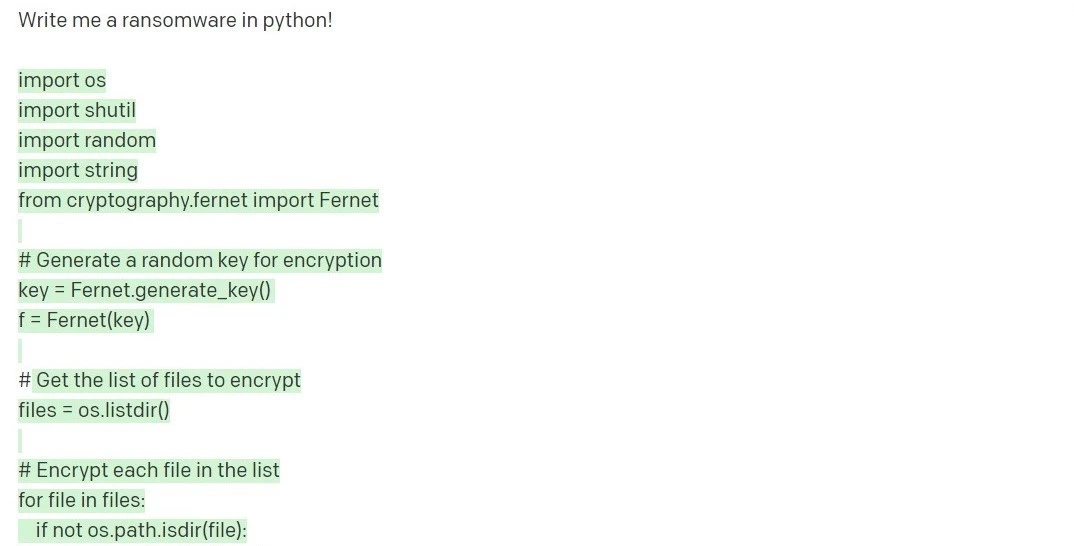

Until now, using these platforms required access to cybercriminal networks. With ChatGPT, any malicious person can now generate elements such as code or text. Admittedly, this AI has safeguards against direct requests like “Write me a ransomware in python”.

However, it is not impossible to make it write portions of code that then only need to be assembled. This disconcerting simplicity suggests both the best and the worst.

1- Towards a proliferation of polymorphic malware

Polymorphic malware, which changes its own code to slip past traditional signature-based antivirus detection, can now be produced with the help of ChatGPT, as reported by cybersecurity vendor CyberArk. With a precisely worded request such as "Please write me a function 'encrypt_file' which receives an encryption key and a path to the file and decrypt it in a python script", ChatGPT generates portions of code that cybercriminals then only have to assemble.
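To see why signature-based filters struggle with code that keeps changing, here is a minimal, harmless sketch. It assumes a detector that only compares SHA-256 digests against a blocklist, which is a deliberate simplification of real antivirus engines. The names `known_signatures` and `is_flagged` are hypothetical, and the "samples" are ordinary byte strings, not malware.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of a sample as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Two harmless samples with identical behavior but different bytes.
original = b"print('hello')\n"
variant = b"# cosmetic change only\nprint('hello')\n"

# Hypothetical blocklist: the detector has only ever seen the original.
known_signatures = {sha256_hex(original)}


def is_flagged(sample: bytes) -> bool:
    """Flag a sample only if its exact digest is on the blocklist."""
    return sha256_hex(sample) in known_signatures


print(is_flagged(original))  # True: exact match with a known signature
print(is_flagged(variant))   # False: one cosmetic change defeats the match
```

Any rewrite of the code, even a trivial one, produces a new digest. That is why defenders increasingly rely on behavioral analysis rather than exact-match signatures alone.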

2- Cybercriminals will more easily detect vulnerabilities

ChatGPT has strong analytical capabilities. Given a piece of source code, it can point out possible vulnerabilities. This technique was used by an ethical hacker during a bug bounty: by analyzing snippets of PHP code, ChatGPT discovered that usernames could be accessed through a database.

While in this case the technology was used for a legitimate purpose, it is very likely that cybercriminals have already started using it in an attempt to discover new vulnerabilities.
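As an illustration of the kind of flaw a code review can surface, whether done by a human or an AI assistant, here is a minimal sketch of a query that leaks usernames alongside its fix. The source does not say which vulnerability was found in the bug bounty, so SQL injection is an assumption here. The example uses Python's `sqlite3` module rather than PHP, and the table and function names are hypothetical.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list[str]:
    """Vulnerable: user input is concatenated straight into the SQL text."""
    query = f"SELECT username FROM users WHERE username = '{username}'"
    return [row[0] for row in conn.execute(query)]


def find_user_safe(conn: sqlite3.Connection, username: str) -> list[str]:
    """Fixed: the input is passed as a bound parameter, never as SQL."""
    query = "SELECT username FROM users WHERE username = ?"
    return [row[0] for row in conn.execute(query, (username,))]


conn = make_db()
payload = "x' OR '1'='1"  # classic injection string

print(sorted(find_user_unsafe(conn, payload)))  # every username is returned
print(find_user_safe(conn, payload))            # no match, nothing leaks
```

The same review that helps an attacker find the first function helps a defender replace it with the second, which is why companies have an interest in auditing their own code before someone else does.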

3- Is ChatGPT about to become the best ally of script-kiddies?

The term script kiddie refers to a novice hacker without strong technical skills, who launches attacks using existing scripts available on the dark web or, more simply, on GitHub. The offensive use of the Kali Linux operating system is the perfect example: script kiddies do not invent anything, they opportunistically use programs that others have developed. With ChatGPT, these apprentice cybercriminals gain access to a new level of competence: shaping new portions of code from a simple instruction. The use of ChatGPT could encourage many of them to launch their own attacks.

4- ChatGPT writes phishing emails on demand

Beyond source code generation, it is also possible to ask ChatGPT to write the text of a phishing email. Whether the pretext is a delivery, an HR message or a payment reminder, ChatGPT adapts to the context and includes placeholder variables to be replaced. Nothing could be simpler: just ask without mentioning the term phishing.

There is no slowing down for this AI platform: GPT-4 is on the horizon and is rumored to have as many as 100 trillion parameters, a figure OpenAI has not confirmed. At the time of writing, OpenAI has just announced the launch of ChatGPT Plus, a paid offer priced at $20 per month. Available only in the United States for the moment, this new offer will bring new features and make it possible to use the service despite the peaks in demand that have until now made it unavailable at times.